In the first part, I talked about best practices and gave a glimpse of how things can be done.

Now I want to focus on building two simple modules from scratch and showing you how easily they work together.

I am going to build:

- A Kubernetes Module for Azure (AKS)

- A Helm Module that will be able to deploy helm charts inside of Kubernetes clusters

- A repository that leverages the two modules in order to deploy a Helm chart inside of AKS

Prerequisites

In order to follow this tutorial yourself, you are going to need some experience with Terraform, Kubernetes, Helm, pre-commit and git.

I suggest installing all of them by following the instructions in each tool's documentation:

- Terraform

- kubectl

- Helm

- pre-commit

- Azure CLI

- A text editor of your choice (I’m using Visual Studio Code, but feel free to use any editor you like)

You should also have an Azure account. You can create a free one here.

AKS Module

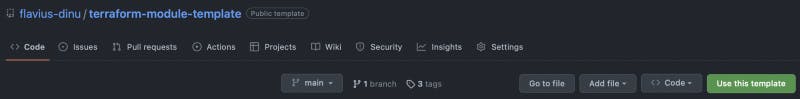

I started by creating a repository from my terraform-module-template, using the Use this template button.

After that, I cloned the repository and was ready to start development.

By using the above template, I have the following folder structure for the Terraform code:

```text
.
├── README.md        # Module documentation
├── example          # One working example based on the module
│   ├── main.tf      # Main code for the example
│   ├── outputs.tf   # Example outputs
│   └── variables.tf # Example variables
├── main.tf          # Main code for the module
├── outputs.tf       # Outputs of the module
├── provider.tf      # Required providers for the module
└── variables.tf     # Variables of the module
```
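The content of the template's provider.tf isn't shown in this post. For this module it only has to declare the azurerm and local providers. Below is a minimal sketch; the version constraints are my assumption, chosen because the argument names used later in main.tf (such as enable_auto_scaling and identity_ids) belong to the azurerm 3.x schema.

```hcl
# provider.tf (sketch): version constraints are assumptions, not taken from the original template
terraform {
  required_providers {
    # Provides azurerm_kubernetes_cluster
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0"
    }
    # Provides local_file, used to export the kubeconfig
    local = {
      source  = "hashicorp/local"
      version = "~> 2.0"
    }
  }
}
```

The module only declares its requirements; the `provider "azurerm"` block with `features {}` belongs in the calling configuration, as in the example folder.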

The starting point of the development will be the root main.tf file.

Let’s first understand what we have to build. To do that, Terraform documentation is our best friend.

I navigated to the azurerm_kubernetes_cluster resource documentation and started from its example to understand the minimum parameters required to build the module.

As I just want to build a simple module for demo purposes, without too many fancy features, I will only use the absolutely necessary parameters. A real-world module will need more than that, so make sure you read the Argument Reference section of the resource page thoroughly.

```hcl
resource "azurerm_kubernetes_cluster" "this" {
  for_each = var.kube_params

  name                = each.value.name
  location            = each.value.rg_location
  resource_group_name = each.value.rg_name
  dns_prefix          = each.value.dns_prefix

  # default_node_pool, identity and the other blocks are added in the next step
}
```

I’ve started pretty small, just adding some essential parameters that are required to create a cluster.

I defined a variable called kube_params to hold all the cluster-related parameters. All of the parameters above are mandatory in the module, because they are read directly as each.value.<something>: if a kube_params entry doesn't contain that key, Terraform fails with an error instead of falling back to a default. The key name can be whatever you want; just pick something that makes sense for that particular parameter.

Next, I added the remaining blocks: the mandatory default_node_pool, the service_principal and identity blocks (AKS needs one of the two), and the tags:

```hcl
  # These blocks go inside resource "azurerm_kubernetes_cluster" "this"
  default_node_pool {
    enable_auto_scaling = lookup(each.value, "enable_auto_scaling", true)
    # min_count/max_count are only set when auto scaling is enabled
    max_count  = lookup(each.value, "enable_auto_scaling", true) ? lookup(each.value, "max_count", 1) : null
    min_count  = lookup(each.value, "enable_auto_scaling", true) ? lookup(each.value, "min_count", 1) : null
    node_count = lookup(each.value, "node_count", 1)
    vm_size    = lookup(each.value, "vm_size", "Standard_DS2_v2")
    name       = each.value.np_name
  }

  # One service_principal block per list element; none if the key is absent
  dynamic "service_principal" {
    for_each = lookup(each.value, "service_principal", [])
    content {
      client_id     = service_principal.value.client_id
      client_secret = service_principal.value.client_secret
    }
  }

  # One identity block per list element; identity = [{}] yields a SystemAssigned identity
  dynamic "identity" {
    for_each = lookup(each.value, "identity", [])
    content {
      type         = lookup(identity.value, "type", "SystemAssigned")
      identity_ids = lookup(identity.value, "identity_ids", null)
    }
  }

  # Global tags merged with the per-cluster tags
  tags = merge(var.tags, lookup(each.value, "tags", {}))
```

In the code above, you can see several of the best practices I mentioned in my previous post: dynamic blocks, ternary operators, and functions.

Wherever you see a lookup, I am also providing a default value to that parameter. For the dynamic blocks, by default, I am not going to create any block if the parameter is not present.
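To illustrate how the dynamic blocks react to the input, here is a hypothetical call with two clusters. The first passes `identity = [{}]` and gets one identity block with the SystemAssigned default; the second passes a UserAssigned identity. Neither sets service_principal, so no service_principal block is generated. The azurerm_user_assigned_identity resource and all names are illustrative, and rg1 is assumed to exist.

```hcl
# Illustrative user-assigned identity (names are hypothetical; rg1 must already exist)
resource "azurerm_user_assigned_identity" "aks" {
  name                = "aks-identity"
  resource_group_name = "rg1"
  location            = "westeurope"
}

module "aks" {
  source = "../"

  kube_params = {
    # identity = [{}]: one identity block, type falls back to "SystemAssigned"
    kube_sys = {
      name        = "kube-sys"
      rg_name     = "rg1"
      rg_location = "westeurope"
      dns_prefix  = "kubesys"
      np_name     = "default"
      identity    = [{}]
    }

    # One identity block of type UserAssigned with an explicit identity ID
    kube_uai = {
      name        = "kube-uai"
      rg_name     = "rg1"
      rg_location = "westeurope"
      dns_prefix  = "kubeuai"
      np_name     = "default"
      identity = [{
        type         = "UserAssigned"
        identity_ids = [azurerm_user_assigned_identity.aks.id]
      }]
    }
  }
}
```

Both entries omit vm_size, enable_auto_scaling and the node counts, so the lookup() defaults from main.tf apply.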

For tags, I've noticed that companies often want to apply some global tags to every resource and, of course, individual tags to a particular resource. To accommodate that, I simply merge two maps: the global tags variable and the tags map inside each kube_params entry. Because merge() gives precedence to later arguments, a per-cluster tag overrides a global tag with the same key.
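A throwaway configuration is enough to check how merge() resolves a key that appears in both maps. The locals below are stand-ins for var.tags and one cluster's tags; running terraform init and terraform apply in an otherwise empty directory prints the merged map.

```hcl
# Standalone demo; the locals stand in for var.tags and a kube_params entry's tags
locals {
  global_tags  = { environment = "dev", owner = "platform" }
  cluster_tags = { owner = "team-a", cluster = "kube1" }

  # Later arguments win, so "owner" comes from cluster_tags
  effective_tags = merge(local.global_tags, local.cluster_tags)
}

output "effective_tags" {
  # Contains: cluster = "kube1", environment = "dev", owner = "team-a"
  value = local.effective_tags
}
```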

Since some users may want to export the cluster's kubeconfig after it is created, I've provided that option too:

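```hcl
resource "local_file" "kube_config" {
  # Only clusters with export_kube_config = true get a kubeconfig file
  for_each = { for k, v in var.kube_params : k => v if lookup(v, "export_kube_config", false) }

  # local_file does not expand "~", so pathexpand() resolves the default path
  filename = lookup(each.value, "kubeconfig_path", pathexpand("~/.kube/config"))
  content  = azurerm_kubernetes_cluster.this[each.key].kube_config_raw
}
```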

To export the kubeconfig, you simply need a parameter called export_kube_config set to true in the kube_params entry. Optionally, kubeconfig_path overrides the default ~/.kube/config location.

This is what my main.tf file looks like in the end:

```hcl
resource "azurerm_kubernetes_cluster" "this" {
  for_each = var.kube_params

  name                = each.value.name
  location            = each.value.rg_location
  resource_group_name = each.value.rg_name
  dns_prefix          = each.value.dns_prefix

  default_node_pool {
    enable_auto_scaling = lookup(each.value, "enable_auto_scaling", true)
    # min_count/max_count are only set when auto scaling is enabled
    max_count  = lookup(each.value, "enable_auto_scaling", true) ? lookup(each.value, "max_count", 1) : null
    min_count  = lookup(each.value, "enable_auto_scaling", true) ? lookup(each.value, "min_count", 1) : null
    node_count = lookup(each.value, "node_count", 1)
    vm_size    = lookup(each.value, "vm_size", "Standard_DS2_v2")
    name       = each.value.np_name
  }

  # Created only when service_principal is provided
  dynamic "service_principal" {
    for_each = lookup(each.value, "service_principal", [])
    content {
      client_id     = service_principal.value.client_id
      client_secret = service_principal.value.client_secret
    }
  }

  # Created only when identity is provided
  dynamic "identity" {
    for_each = lookup(each.value, "identity", [])
    content {
      type         = lookup(identity.value, "type", "SystemAssigned")
      identity_ids = lookup(identity.value, "identity_ids", null)
    }
  }

  # Per-cluster tags override global tags with the same key
  tags = merge(var.tags, lookup(each.value, "tags", {}))
}

resource "local_file" "kube_config" {
  # Only clusters with export_kube_config = true get a kubeconfig file
  for_each = { for k, v in var.kube_params : k => v if lookup(v, "export_kube_config", false) }

  # local_file does not expand "~", so pathexpand() resolves the default path
  filename = lookup(each.value, "kubeconfig_path", pathexpand("~/.kube/config"))
  content  = azurerm_kubernetes_cluster.this[each.key].kube_config_raw
}
```

For the variables.tf file, I just defined the kube_params and tags variables as they are the only vars that I’ve used inside of my module.

```hcl
variable "kube_params" {
  type        = any
  description = <<-EOF
    name                = string
    rg_name             = string
    rg_location         = string
    dns_prefix          = string
    client_id           = string
    client_secret       = string
    vm_size             = string
    enable_auto_scaling = string
    max_count           = number
    min_count           = number
    node_count          = number
    np_name             = string
    service_principal = list(object({
      client_id     = string
      client_secret = string
    }))
    identity = list(object({
      type         = string
      identity_ids = list(string)
    }))
  EOF
}

variable "tags" {
  type        = any
  description = <<-EOT
    Global tags to apply to the resources.
  EOT
  default     = {}
}
```

I've used the any type for the variables because I wanted callers to be able to omit parameters and fall back to the default values in the lookup() calls. When I wrote this, the alternative was an experimental feature called defaults, which lets you change the variable type to map(object) but is not suitable for production use cases. More about that here. Terraform 1.3 has since made optional object attributes with default values stable.
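For reference, this is roughly what kube_params could look like as a map(object) on Terraform 1.3 or later, where optional() accepts a default value. It is a sketch, not the module's actual code: the defaults mirror the ones passed to lookup() in main.tf, and tags, export_kube_config and kubeconfig_path are included because the resources read them.

```hcl
# Sketch for Terraform >= 1.3; defaults mirror the lookup() defaults in main.tf
variable "kube_params" {
  description = "Map of AKS clusters to create, keyed by an arbitrary identifier."
  type = map(object({
    name                = string
    rg_name             = string
    rg_location         = string
    dns_prefix          = string
    np_name             = string
    vm_size             = optional(string, "Standard_DS2_v2")
    enable_auto_scaling = optional(bool, true)
    max_count           = optional(number, 1)
    min_count           = optional(number, 1)
    node_count          = optional(number, 1)
    export_kube_config  = optional(bool, false)
    kubeconfig_path     = optional(string) # null when omitted
    tags                = optional(map(string), {})
    service_principal = optional(list(object({
      client_id     = string
      client_secret = string
    })), [])
    identity = optional(list(object({
      type         = optional(string, "SystemAssigned")
      identity_ids = optional(list(string))
    })), [])
  }))
}
```

With this type every attribute exists after conversion, so the resources could read each.value.vm_size and similar directly. kubeconfig_path is left without a default here, so local_file would need something like `coalesce(each.value.kubeconfig_path, pathexpand("~/.kube/config"))`.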

I documented the expected parameters inside the variable description because I want to generate nice documentation with tfdocs (terraform-docs) through pre-commit (I will get back to this later on).

For outputs.tf:

```hcl
output "kube_params" {
  value = { for kube in azurerm_kubernetes_cluster.this : kube.name => { "id" : kube.id, "fqdn" : kube.fqdn } }
}

output "kube_config" {
  # nonsensitive() makes Terraform show these credentials in plain text in its CLI output
  value = { for kube in azurerm_kubernetes_cluster.this : kube.name => nonsensitive(kube.kube_config) }
}

output "kube_config_path" {
  value = { for k, v in local_file.kube_config : k => v.filename }
}
```

I've defined three outputs: one with the most relevant attributes of each Kubernetes cluster (ID and FQDN) and two for the kubeconfig. The kubeconfig outputs let users log in to the cluster either with the kubeconfig file or with the Kubernetes user/password/certificate combination.

You can use functions inside outputs, and you are encouraged to do so to accommodate your use case.
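As an example of shaping outputs with functions, here are two hypothetical extra outputs for outputs.tf: a sorted list of cluster names, and the raw kubeconfig per cluster kept behind `sensitive = true`, which is a more cautious alternative to the nonsensitive() call in the kube_config output.

```hcl
# Hypothetical additional outputs for the AKS module
output "cluster_names" {
  description = "Names of all clusters created by the module, sorted alphabetically."
  value       = sort([for kube in azurerm_kubernetes_cluster.this : kube.name])
}

output "kube_config_raw" {
  description = "Raw kubeconfig per kube_params key, redacted in CLI output."
  value       = { for k, kube in azurerm_kubernetes_cluster.this : k => kube.kube_config_raw }
  sensitive   = true
}
```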

The module code is now ready, but it should contain at least one example that shows users how to run it.

So, in the example folder's main.tf file, I've added the following code:

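```hcl
provider "azurerm" {
  features {}
}

module "aks" {
  source = "../"

  kube_params = {
    kube1 = {
      name                = "kube1"
      rg_name             = "rg1" # the module doesn't create resource groups, so rg1 must already exist
      rg_location         = "westeurope"
      dns_prefix          = "kube"
      identity            = [{}] # one identity block with the default SystemAssigned type
      enable_auto_scaling = false
      node_count          = 1
      np_name             = "kube1"
      export_kube_config  = true
    }
  }
}
```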

I've added the provider configuration and called the module with some of the essential parameters. You could also move the kube_params value into a variable and provide it directly, in a tfvars file, or even in a YAML file.
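A sketch of the variable-based approach follows; the file layout is my assumption, not part of the original repository. The example declares kube_params as an input, forwards it to the module, and keeps the cluster definitions in terraform.tfvars, which Terraform loads automatically.

```hcl
# example/variables.tf: declare the cluster map as an input
variable "kube_params" {
  type        = any
  description = "Map of AKS clusters, forwarded unchanged to the module."
}

# example/main.tf: forward the variable instead of an inline map
module "aks" {
  source      = "../"
  kube_params = var.kube_params

  # YAML alternative (clusters.yaml is a hypothetical file name):
  # kube_params = yamldecode(file("${path.module}/clusters.yaml"))
}

# example/terraform.tfvars: the values that were previously inline
kube_params = {
  kube1 = {
    name                = "kube1"
    rg_name             = "rg1"
    rg_location         = "westeurope"
    dns_prefix          = "kube"
    identity            = [{}]
    enable_auto_scaling = false
    node_count          = 1
    np_name             = "kube1"
    export_kube_config  = true
  }
}
```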

The example above creates only one Kubernetes cluster. If you want more, copy and paste the kube1 block and adjust the parameters based on the module, similar to this:

```hcl
provider "azurerm" {
  features {}
}

module "aks" {
  source = "../"

  kube_params = {
    kube1 = {
      name                = "kube1"
      rg_name             = "rg1"
      rg_location         = "westeurope"
      dns_prefix          = "kube"
      identity            = [{}]
      enable_auto_scaling = false
      node_count          = 1
      np_name             = "kube1"
      export_kube_config  = true
    }

    kube2 = {
      name                = "kube2"
      rg_name             = "rg1"
      rg_location         = "westeurope"
      dns_prefix          = "kube"
      identity            = [{}]
      enable_auto_scaling = false
      node_count          = 4
      np_name             = "kube2"
      export_kube_config  = false
    }

    kube3 = {
      name                = "kube3"
      rg_name             = "rg1"
      rg_location         = "westeurope"
      dns_prefix          = "kuber"
      identity            = [{}]
      enable_auto_scaling = true
      max_count           = 4
      min_count           = 2
      node_count          = 2
      np_name             = "kube3"
      export_kube_config  = false
    }
  }
}
```

I've added three just for reference; you have full control over the number of clusters you create.
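The examples pass `rg_name = "rg1"` and assume that resource group already exists, because the module doesn't create one. If you'd rather have the example create it, a sketch like the following (my addition, placed next to the existing provider block) should work; referencing the resource group's attributes also makes Terraform create it before the cluster.

```hcl
# Hypothetical addition to example/main.tf: create the resource group as well
resource "azurerm_resource_group" "rg1" {
  name     = "rg1"
  location = "westeurope"
}

module "aks" {
  source = "../"

  kube_params = {
    kube1 = {
      name                = "kube1"
      rg_name             = azurerm_resource_group.rg1.name # implicit dependency on the RG
      rg_location         = azurerm_resource_group.rg1.location
      dns_prefix          = "kube"
      identity            = [{}]
      enable_auto_scaling = false
      node_count          = 1
      np_name             = "kube1"
      export_kube_config  = true
    }
  }
}
```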

OK, let's get back to the initial example. Before running the code, you should first log in to Azure:

```bash
az login
```

You may think we're done, right? Not yet, because I like to run pre-commit, with all its hooks, on my code. I'm using the same pre-commit configuration I mentioned in the previous post, so before committing my code, I run:

```bash
pre-commit run --all-files
```

Before running this command, I made sure my README.md contained the following lines:

```markdown
<!-- BEGINNING OF PRE-COMMIT-TERRAFORM DOCS HOOK -->
<!-- END OF PRE-COMMIT-TERRAFORM DOCS HOOK -->
```

Between these lines, tfdocs fills in the documentation based on your resources, variables, outputs, and providers.

This is what the README looks like after running the pre-commit command:

README

Requirements

No requirements.

Providers

Modules

No modules.

Resources

| Name | Type |
|------|------|
| azurerm_kubernetes_cluster.this | resource |
| local_file.kube_config | resource |

Inputs

| Name | Description | Type | Default | Required |
|------|-------------|------|---------|:--------:|
| kube_params | name = string rg_name = string rg_location = string dns_prefix = string client_id = string client_secret = string vm_size = string enable_auto_scaling = string max_count = number min_count = number node_count = number np_name = string service_principal = list(object({ client_id = string client_secret = string })) identity = list(object({ type = string identity_ids = list(string) })) | any | n/a | yes |
| tags | Global tags to apply to the resources. | any | {} | no |

Outputs

| Name | Description |
|------|-------------|
| kube_config | n/a |
| kube_config_path | n/a |
| kube_params | n/a |

With this module done, I pushed all my changes, and a new tag was created automatically in my repository (I will discuss the pipelines I use for that in another post).

Helm Release Module

The steps are the same as for the AKS module: first, understand what you have to build by reading the helm_release documentation and its parameters.

I created a new repository from the same template and started the development itself. To avoid repeating all the steps above, I'll show you only the final main.tf file for this module.

```hcl
resource "helm_release" "this" {
  for_each = var.helm

  name             = each.value.name
  chart            = each.value.chart
  repository       = lookup(each.value, "repository", null)
  version          = lookup(each.value, "version", null)
  namespace        = lookup(each.value, "namespace", null)
  create_namespace = lookup(each.value, "create_namespace", false)

  # Each entry in "values" is a path to a YAML file whose content is passed to the chart
  values = [for yaml_file in lookup(each.value, "values", []) : file(yaml_file)]

  # One set block per item in the optional "set" list
  dynamic "set" {
    for_each = lookup(each.value, "set", [])
    content {
      name  = set.value.name
      value = set.value.value
      type  = lookup(set.value, "type", "auto")
    }
  }

  # Same as "set", but the values are hidden in the plan output
  dynamic "set_sensitive" {
    for_each = lookup(each.value, "set_sensitive", [])
    content {
      name  = set_sensitive.value.name
      value = set_sensitive.value.value
      type  = lookup(set_sensitive.value, "type", "auto")
    }
  }
}
```
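The Helm module's variables.tf isn't shown above. Following the same pattern as kube_params, a sketch could look like this, together with a hypothetical caller that uses the values and set options. The values file path and the server.service.type chart value are illustrative; value names depend on the chart you deploy.

```hcl
# variables.tf of the Helm module (sketch): documents the keys main.tf reads
variable "helm" {
  type        = any
  description = <<-EOT
    name             = string
    chart            = string
    repository       = string
    version          = string
    namespace        = string
    create_namespace = bool
    values           = list(string)
    set = list(object({
      name  = string
      value = string
      type  = string
    }))
    set_sensitive = list(object({
      name  = string
      value = string
      type  = string
    }))
  EOT
}

# Caller side, e.g. the module's example/main.tf (helm provider configuration omitted)
module "helm" {
  source = "../"

  helm = {
    argo = {
      name             = "argocd"
      repository       = "https://argoproj.github.io/argo-helm"
      chart            = "argo-cd"
      namespace        = "argocd"
      create_namespace = true
      # file() resolves relative paths from the working directory, so anchor on path.module
      values = ["${path.module}/values/argocd.yaml"]
      set = [
        { name = "server.service.type", value = "LoadBalancer" }
      ]
    }
  }
}
```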

This module is also pushed, tagged and good to go.

Automation based on the Two Modules

I created a third repository, this time from scratch, that uses the two modules built throughout this post.

```hcl
provider "azurerm" {
  features {}
}

module "aks" {
  source = "git@github.com:flavius-dinu/terraform-az-aks.git?ref=v1.0.3"

  kube_params = {
    kube1 = {
      name                = "kube1"
      rg_name             = "rg1"
      rg_location         = "westeurope"
      dns_prefix          = "kube"
      identity            = [{}]
      enable_auto_scaling = false
      node_count          = 1
      np_name             = "kube1"
      export_kube_config  = true
      kubeconfig_path     = "./config"
    }
  }
}

# The helm provider reads the kubeconfig file exported by the AKS module
provider "helm" {
  kubernetes {
    config_path = module.aks.kube_config_path["kube1"]
  }
}

# Alternative way of declaring the provider
# provider "helm" {
#   kubernetes {
#     host                   = module.aks.kube_config["kube1"][0].host
#     username               = module.aks.kube_config["kube1"][0].username
#     password               = module.aks.kube_config["kube1"][0].password
#     client_certificate     = base64decode(module.aks.kube_config["kube1"][0].client_certificate)
#     client_key             = base64decode(module.aks.kube_config["kube1"][0].client_key)
#     cluster_ca_certificate = base64decode(module.aks.kube_config["kube1"][0].cluster_ca_certificate)
#   }
# }

module "helm" {
  source = "git@github.com:flavius-dinu/terraform-helm-release.git?ref=v1.0.0"

  helm = {
    argo = {
      name             = "argocd"
      repository       = "https://argoproj.github.io/argo-helm"
      chart            = "argo-cd"
      create_namespace = true
      namespace        = "argocd"
    }
  }
}
```

So, in this repository, I create one Kubernetes cluster and deploy the ArgoCD Helm chart on it. Because the helm provider configuration (in both the active and the commented variant) references outputs of module.aks, Terraform has an implicit dependency on the cluster: it first waits for the cluster to be ready, and only then deploys the Helm chart.

I've kept the commented block in the code to show that you can connect to the cluster using either of the two outputs; both solutions work fine.

You should use a remote state if you are collaborating or using the code in a production environment. I haven’t done that for this example, because it is just a simple demo.
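A minimal sketch of what that could look like for this repository: a terraform block that pins the providers and stores state in an Azure Storage account. The resource group, storage account, container, and key names are placeholders, the storage account must exist before terraform init, and the version constraints are assumptions (helm 2.x matches the `kubernetes { }` block syntax used above).

```hcl
# versions.tf for the root repository (sketch): names and versions are placeholders
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0"
    }
    helm = {
      source  = "hashicorp/helm"
      version = "~> 2.0" # the kubernetes { } block syntax above is the 2.x form
    }
  }

  # Remote state in an existing Azure Storage account
  backend "azurerm" {
    resource_group_name  = "tfstate-rg"
    storage_account_name = "tfstatestorage"
    container_name       = "tfstate"
    key                  = "aks-argocd.terraform.tfstate"
  }
}
```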

With the automation in place, we can run:

```bash
terraform init
terraform plan
terraform apply
```

Both components are created successfully, and if you log in to your AKS cluster, you will see the ArgoCD-related resources by running:

```bash
kubectl get all -n argocd
```

Repository Links

Useful Documentation

If you need help understanding some of the concepts used throughout this post, here is a list of useful articles and documentation:

For Each

Dynamic blocks

- https://spacelift.io/blog/terraform-dynamic-blocks

- https://www.terraform.io/language/expressions/dynamic-blocks

Pre-Commit